Well, probably (I certainly have).

A typical requirement is to show random products on the home page, random products of a category on the category page, or similar. Magento offers a simple block that can show a specified number of random products, Mage_Catalog_Block_Product_List_Random. To add filtering, like by category, you still need to write your own block, but it could easily be extended.

This is the CMS code:

{{block type="catalog/product_list_random" num_products="4"}}

But don’t get too excited. This block type will make your site slow, you should not use it. The problem is in this line:

$collection->getSelect()->order('rand()');

I’ve been recently advocating against using ORDER BY RAND() to select random products from a Magento database. 1 Generally, this method is discouraged because of its performance issues.

In the article linked above, Ben Scholzen suggests preselecting random ids between the smallest and greatest id, but the problem with this approach is that it only works well on tables without gaps in the id column. As soon as you have gaps, you need a query like this:

SELECT `column` FROM `table` WHERE `id` >= @rand_id

ORDER BY `id` ASC LIMIT 1

And then the result is biased towards items after the gap. Imagine a table with ids 1, 2, 5, 6. Roll a dice and select the next greatest id: for 3, 4 and 5 the result is 5.

Performant, unbiased alternatives

The alternative that I have used first, was to load all ids from which I want to select the random products: I apply my filters (like store filter and visibility filter) but don’t select any attributes or join other additional tables yet, then use getAllIds() to select only the product ids into an array.

This array gets shuffled with array_shuffle and then, to select n ids from it, sliced the first n array elements with array_slice.

It was an improvement, but not as much as I hoped for. Obviously shuffling a large array takes some time. But since I don’t need all elements to be shuffled, there had to be a more effective method. In this article I analyzed alternatives and found this to be the best solution to draw a small number $k of elements from a big array $array:

$randomArray = [];

$maxKey = count($array)-1;

while (count($randomArray) < $k)) {

$randomKey = mt_rand(0, $maxKey);

$randomArray[$randomKey] = $array[$randomKey];

}

First Benchmarks

To compare the actual difference in practice, I ran benchmarks, following these steps:

- Created a Magento 1.9.1 instance, using my Simple Magento Boilerplate Vagrantbox

- Generated a catalog with 10 categories and 100000 products, using Ryaan Anthony’s build_catalog script

- Build index

- Disable Cache and Flat Index

- Repeatedly run script that loads a product collection (limited to 4 products) with one of the methods, measure time taken and memory used.

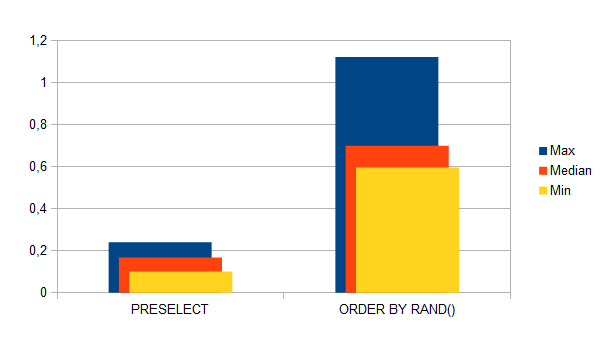

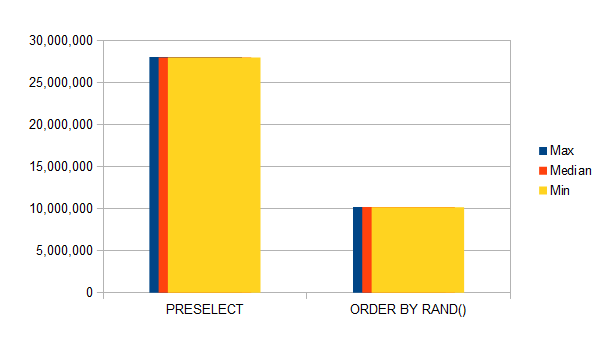

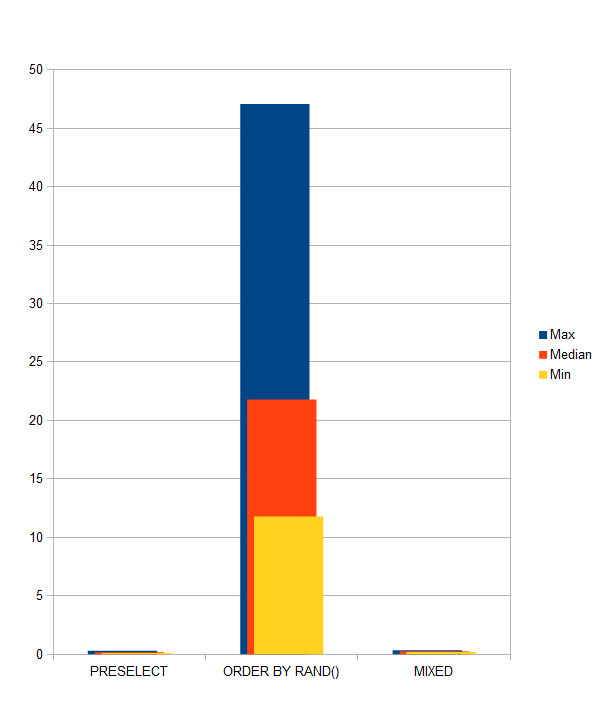

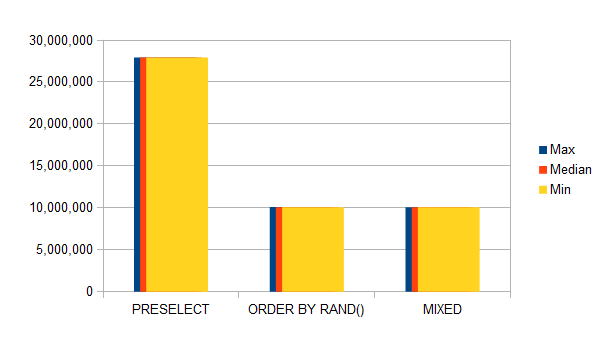

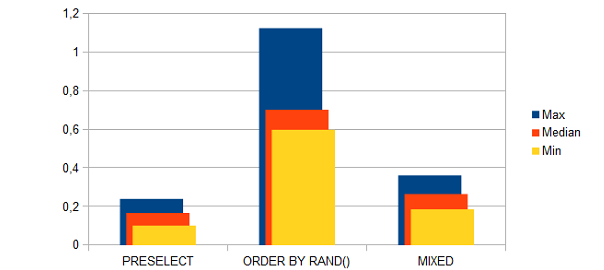

I ran the benchmark script once for both methods without counting to warm up the Opcode cache, then 100 times each. The results are shown below:

You see that the mean time is 4 times better with random preselection (0.17s / 0.70s) and the best time even 6 times better (0.10s / 0.60s).

The downside is, the preselection needs more than 2.5 times the memory for 100,000 products (28 MiB / 10 MiB). With catalog sizes up to lower 10,000s the effect is not that big, but if we go beyond the 100,000 this will be a problem (more on different catalog sizes below).

The best of both worlds

A comment on reddit made me think, if I am really on the right path:

Interesting! I […] couldn’t find a circumstance where even just fetching the IDs from the DB was as fast as doing the order/limit and only fetching a subset.

Besides the memory issues, could it be that ORDER BY RAND() by itself is not the problem, but using it together with all the table joins of Magento? What if I preselect the random IDs with ORDER BY RAND()?

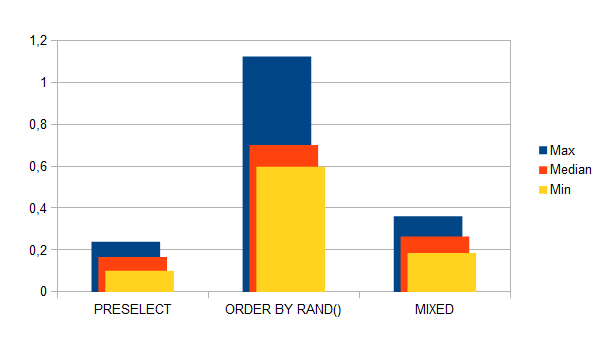

See how the “mixed” approach compares with the previous two:

It was slightly slower than the PHP preselect approach, but still clearly in favor of the pure order by rand and without the increased memory usage in PHP.

| minimum | mean | maximum | |

|---|---|---|---|

| Preselect | 0.10 | 0.17 | 0.24 |

| ORDER BY RAND() | 0.60 | 0.70 | 1.12 |

| Mixed | 0.19 | 0.26 | 0.36 |

More data!

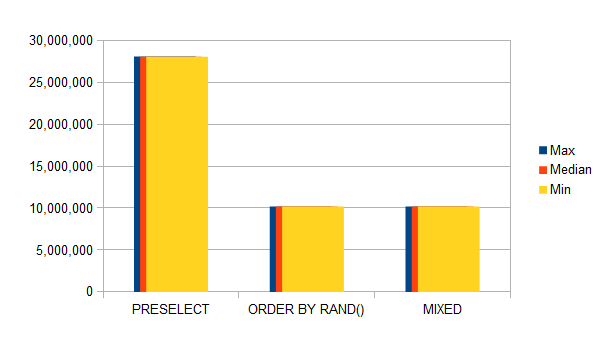

Let’s see, how enabling the flat catalog tables affect the result, which reduce the number of table joins for a product collection. Usually they are enabled in any decently sized catalog. Here are the results for the same database as above, with flat indexes turned on:

Memory usage was almost the same, but execution time went through the roof for the pure ORDER BY RAND() approach, while staying the same for the others.

| minimum | mean | maximum | |

|---|---|---|---|

| Preselect | 0.10 | 0.17 | 0.30 |

| ORDER BY RAND() | 11.78 | 21.78 | 47.09 |

| Mixed | 0.19 | 0.26 | 0.34 |

The problem of the pure MySQL approach with ORDER BY RAND() became even more evident. While monitoring MySQL with mytop I noticed that besides for sorting, lots of time is spent for copying. The problem here seems to be, that sorting without an index, as with ORDER BY RAND() copies the data to a temporary table and orders that. With the flat index, all product attributes are fetched from a single table, which increases the amount of data copied to and from the temporary table for sorting. I might be missing something else here, but the performance dropped from bad to horrible, and it even caused my Vagrantbox to crash at first try because its disk got full (40 GB). So while PHP uses less memory with this approach, MySQL is all the more resource hungry.

Different catalog sizes

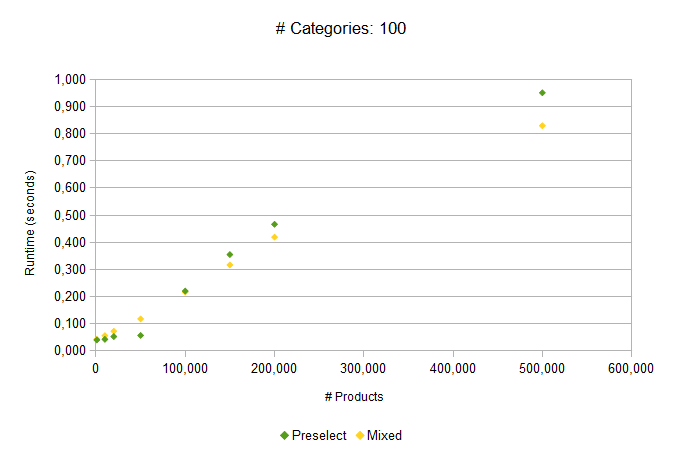

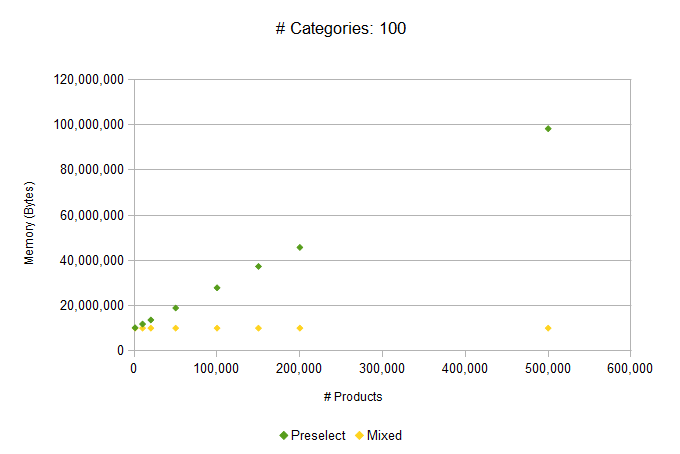

Now let’s run some tests with different catalog sizes to see if there are cases where the “mixed” and “preselect” approaches have a more significant difference.

100 Categories

| Mean Time (s) | Mean Memory (Bytes) | |||

|---|---|---|---|---|

| Products | Mixed | Preselect | Mixed | Preselect |

| 1,000 | 0.043 | 0.040 | 10,077,776 | 10,244,848 |

| 10,000 | 0.056 | 0.043 | 10,077,776 | 11,881,096 |

| 20,000 | 0.073 | 0.053 | 10,077,776 | 13,692,432 |

| 50,000 | 0.118 | 0.057 | 10,077,776 | 18,993,328 |

| 100,000 | 0.217 | 0.221 | 10,077,776 | 27,918,304 |

| 150,000 | 0.317 | 0.355 | 10,077,776 | 37,367,024 |

| 200,000 | 0.419 | 0.467 | 10,077,776 | 45,777,456 |

| 500,000 | 0.830 | 0.951 | 10,077,776 | 98,266,360 |

For small and medium sized catalogs with less than 100,000 products the preselect approach is faster, but PHP consumes much more memory. Somewhere between 50,000 and 100,000 it hits a wall and the mixed approach gets faster. It’s growing linear at least up to 500,000 products and stays constant in memory usage (of PHP).

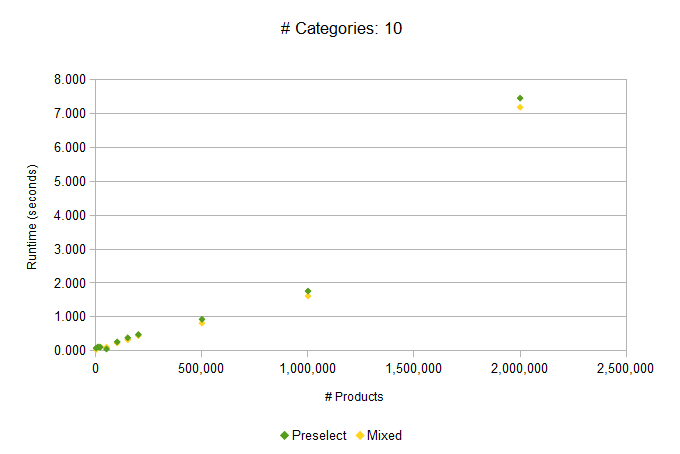

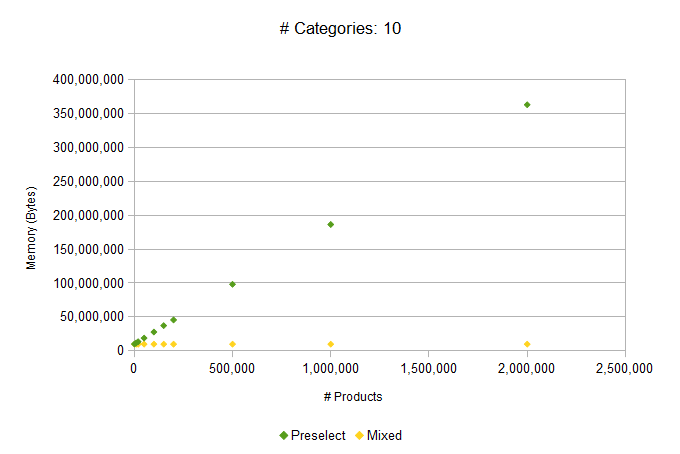

10 Categories

I ran the same tests with 10 categories to see if the number of categories make a difference, and also to have a test case for really small catalogs and really big catalogs up to 2 million products (with more categories or even multiple websites, I would have waited forever for the indexers).

These are the results:

| Mean Time (s) | Mean Memory (Bytes) | |||

|---|---|---|---|---|

| Products | Mixed | Preselect | Mixed | Preselect |

| 100 | 0.047 | 0.046 | 10,077,776 | 10,208,760 |

| 1,000 | 0.045 | 0.087 | 10,077,776 | 10,244,848 |

| 10,000 | 0.107 | 0.121 | 10,077,776 | 11,881,096 |

| 20,000 | 0.122 | 0.110 | 10,077,776 | 13,692,432 |

| 50,000 | 0.114 | 0.057 | 10,077,776 | 18,993,432 |

| 100,000 | 0.236 | 0.269 | 10,077,776 | 27,918,304 |

| 150,000 | 0.331 | 0.390 | 10,077,776 | 37,367,024 |

| 200,000 | 0.452 | 0.485 | 10,077,776 | 45,777,904 |

| 500,000 | 0.821 | 0.934 | 10,077,776 | 98,274,992 |

| 1,000,000 | 1.620 | 1.765 | 10,077,776 | 186,471,768 |

| 2,000,000 | 7.182 | 7.450 | 10,077,816 | 362,866,216 |

The trend is the same, but the mixed approach is already faster for smaller catalog sizes. But the time stays in the same order of magnitude as the preselect approach, so for big catalogs both are going to be too slow.

Conclusion

My recommendation based on these numbers would be:

- For small catalogs (< 10,000 products) you can use the preselect approach, which is simple and performant enough and is faster for more categories

- For medium sized catalogs (< 100,000 products) prefer the mixed approach

- For big catalogs you should look for something different.

- If you only need to pick random products from a small subset of the catalog (like from a single category), it is comparable with a catalog that is just as small. This is because the bottleneck is to load all candidates into a PHP array (“preselect” approach) or into a temporary table (“mixed” approach)

YMMV, so as always, run your own benchmarks on your catalog with your hardware to see what works for you.

But none of these approaches are really performant if you have a decent sized catalog. So what can we do?

Caching

First, make sure that you don’t load random products on every request. Use the cache. Even with a cache lifetime of just a few minutes, this will reduce load on a frequently visited page significantly. If you want to avoid showing the same products to the same user on a page refresh, you can cache multiple versions of the block using different cache keys and select one of the tags based on time, a counter in the session, or randomly.

The following method in a block caches 10 versions of the block and rotates them for each user. With a low cache lifetime most users will not see the same version twice:

public function getCacheKeyInfo()

{

$session = Mage::getSingleton('core/session');

$counter = $session->getData('random_counter');

$counter = ($counter + 1) % 10;

$session->setData('random_counter', $counter);

return array(

$this->getNameInLayout(),

$counter

);

}

Alternatives

Second, as stated above, for big catalogs you should look for something different. The problem with ORDER BY RAND() is that even though we minimized the data to be copied, it still copies all rows to a temporary table and generates a random number for each. The sorting itself is optimized to not sort all rows (See LIMIT Optimization), but copying takes its time.

There is another famous blog post on selecting random rows in MySQL written by Jan Kneschke. He suggests using an index table with all ids, that has its own primary key without gaps. This index table would be updated automatically with triggers, and random rows can be selected by the index table, using random keys between min(key) and max(key).

But this solution is built for random selection from the whole table without additional filters. For product collections in Magento filters are essential, at least the visibility filter. The index table only uses its potential if I can select any random number in the key range and this does not work with filters.

A solution that I could imagine, is to create one index table for each filter that is needed. If you don’t have countless random blocks with different filters, this could work. Of course, these filtered index tables cannot be automatically updated with triggers on catalog_product_entity like a complete index table, but I’m sure, that it can be achieved using the indexer mechanisms of Magento, especially the partial indexer of Magento Enterprise.

Code

After all these numbers, have some code. Below are complete examples to load a product collection with either of the presented approaches.

The “preselect” approach

$numberOfItems = 4;

$productCollection = Mage::getModel('catalog/product')->getCollection();

$productCollection

->addStoreFilter()

->setVisibility(Mage::getSingleton('catalog/product_visibility')->getVisibleInCatalogIds());

$candidateIds = $productCollection->getAllIds();

$numberOfProducts = count($candidateIds);

$chosenIds = [];

while ($numberOfItems) {

$randomKey = mt_rand(0, $numberOfProducts - 1);

if (!isset($chosenIds[$randomKey])) {

chosenIds[$randomKey] = $candidateIds[$randomKey];

--$numberOfItems;

}

}

$productCollection->addIdFilter($chosenIds);

$productCollection

->addMinimalPrice()

->addFinalPrice()

->addTaxPercents()

->addAttributeToSelect(Mage::getSingleton('catalog/config')->getProductAttributes())

->addUrlRewrite();

$productCollection->load();

The “mixed” approach

The construction of $idSelect is a bit cumbersome and there is certainly a more elegant way. I could not reuse getAllIds() because it resets the ORDER clause, so copied the internals, but without the reset:

$numberOfItems = 4;

// Step 1: Preselect ids using ORDER BY RAND()

// Filters are applied here

$productCollection = Mage::getModel('catalog/product')

->getCollection();

$productCollection

->addStoreFilter()

->setVisibility(Mage::getSingleton('catalog/product_visibility')->getVisibleInCatalogIds());

$productCollection->getSelect()->order('RAND()');

$idsSelect = clone $productCollection->getSelect();

$idsSelect->reset(Zend_Db_Select::LIMIT_COUNT);

$idsSelect->reset(Zend_Db_Select::LIMIT_OFFSET);

$idsSelect->reset(Zend_Db_Select::COLUMNS);

$idsSelect->columns('e.' . $productCollection->getEntity()->getIdFieldName());

$idsSelect->limit($numberOfItems, null);

$idsSelect->resetJoinLeft();

$accessor = new ReflectionObject($productCollection);

$_bindParams = $accessor->getProperty('_bindParams');

$_bindParams->setAccessible(true);

$chosenIds = $productCollection->getConnection()

->fetchCol($idsSelect, $_bindParams->getValue($productCollection));

// Step 2: Load products

// Attributes and index data are joined here

$productCollection->addIdFilter($chosenIds);

$productCollection

->addMinimalPrice()

->addFinalPrice()

->addTaxPercents()

->addAttributeToSelect(Mage::getSingleton('catalog/config')->getProductAttributes())

->addUrlRewrite();

$productCollection->load();

Notes:

- For example in this Magento StackExchange post: Four Random Products on Homepage ↩

Thanks for sharing, it’s a pleasure to read such a well researched topic!

Hello,

I have used your preselect approach code directly within a template phtml file, working great. But, from my limited understanding it is not the ‘correct’ way. Could you guide me to creating a custom module for this?

You would be helping in my learning, and i’d be thoroughly grateful.

Many Thanks,

Vish